It is 6:30 PM on Christmas Eve in England. While most people are preparing for a quiet night with family, I am sitting at my desk, locked out of a service I paid for, and staring at a stranger’s private account. This isn’t just a minor technical “hiccup.” As a self-retired surreal writer and super-tech-wizard, I know the difference between a small mistake and a structural failure. What I am looking at right now is a structural failure in the way AI companies handle our identity and security.

Suno AI has quickly become a leader in the world of generative music, but today, they proved that their backend security is built on shaky ground. My experience over the last 12 hours has revealed a critical flaw in how they map user accounts to phone numbers, and it’s a warning every subscriber needs to hear.

The Foundation: A Premium Subscription

My day started productively. I am a Suno Premier subscriber. I recently upgraded my account. I had 10,000 tokens ready to use and had spent the morning writing a new song. I treated this like any other professional job: I did the work, I paid the fee, and I expected the tools to be ready for use. However, when I tried to log back in this afternoon to finish my work, the “foundation” of the service completely gave way.

The Failure of the Gateways: Ding Ding vs. Clerk

Usually, when I log in to Suno on my desktop using Microsoft Edge or Google Chrome, the process is simple. I enter my phone number, and a verification code (OTP) arrives via a text message gateway identified as “Ding Ding.” It’s a standard, reliable route that has never failed me before. But today, the desktop site went silent. No matter how many times I requested a code, nothing arrived.

The system was essentially “broken.” As a surreal fiction writer, you learn that when your primary system fails, you switch to a backup. In this case, the backup was the Suno Android app. When I requested a code through the app, it finally arrived, but the sender was different. Instead of “Ding Ding,” the message came from a gateway called “Clerk.” This was the first red flag. It suggested that Suno was failing over to a secondary routing system, and clearly, that system wasn’t synced with their main database.

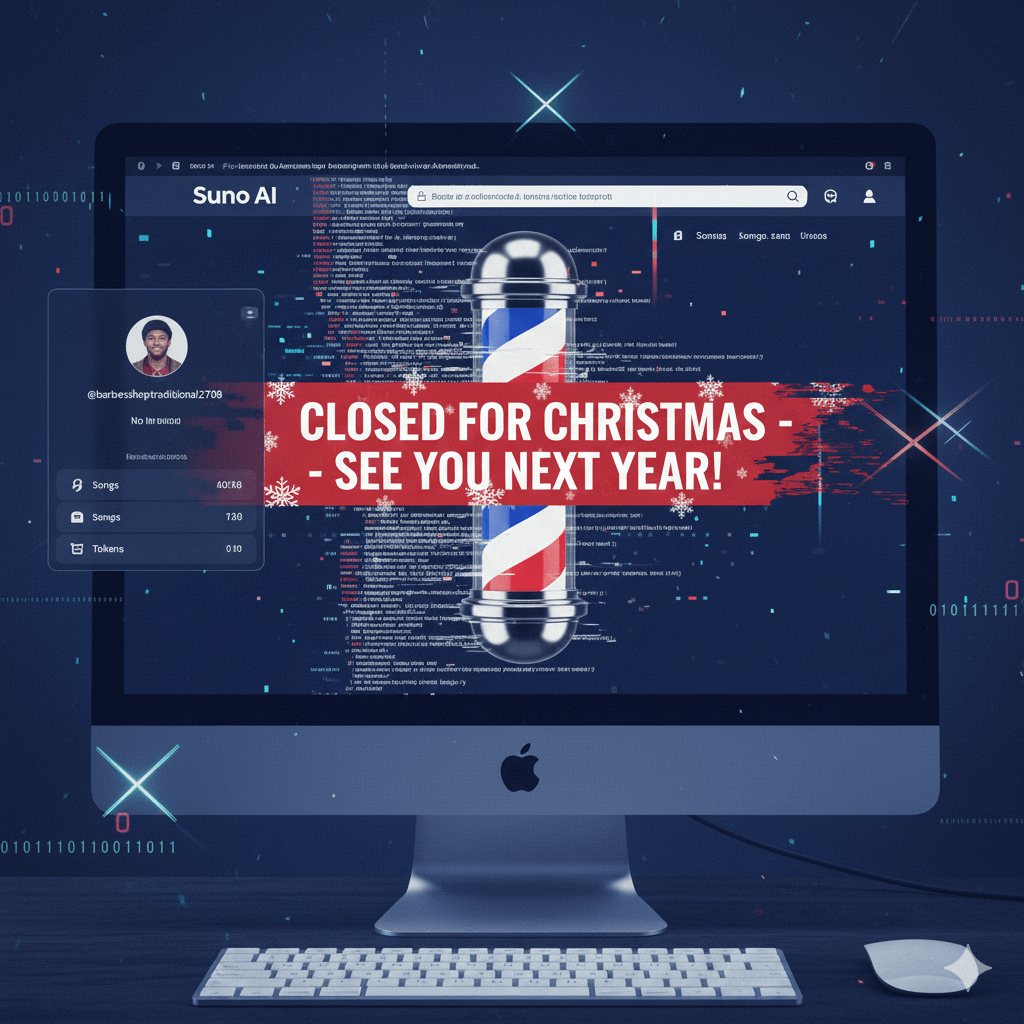

Entering the Twilight Zone: The Barbershop Account

I entered the code from “Clerk,” expecting to see my library of 25 songs and my 10,000 tokens. Instead, I was logged into a stranger’s account. The display name on the screen was “@barbershoptraditional2708.”

I was suddenly in a “ghost” account. There were no songs, no followers, and most importantly, none of my paid credits. Because of a routing error between two different SMS providers, Suno’s backend had cross-wired my phone number with someone else’s User ID.
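For anyone who wants to picture how one phone number can end up pointing at two different accounts, here is a minimal Python sketch. It is not Suno's code, which is not public, and it is only one possible explanation. Every name in it (`normalize_e164`, `AccountStore`, the `user-N` IDs) is hypothetical, and the phone numbers come from the UK range reserved for fiction. The assumption is that two login paths store the same number in different formats, so the second path finds no match and quietly creates a fresh, empty account.

```python
# Hypothetical illustration only. None of these names come from Suno,
# Clerk, or any real API; they exist purely to show the failure mode.


def normalize_e164(raw: str, default_country_code: str = "44") -> str:
    """Reduce a phone number to one canonical form, e.g. +447700900123."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    if raw.strip().startswith("+"):
        return "+" + digits                  # already international
    if digits.startswith("00"):
        return "+" + digits[2:]              # 0044... style prefix
    if digits.startswith("0"):
        return "+" + default_country_code + digits[1:]  # national format
    return "+" + digits


class AccountStore:
    """Toy phone-number-to-user-ID table (an assumption, not a real schema)."""

    def __init__(self, normalize: bool):
        self.normalize = normalize
        self.accounts = {}
        self._next_id = 1

    def _key(self, phone: str) -> str:
        return normalize_e164(phone) if self.normalize else phone.strip()

    def login(self, phone: str) -> str:
        """Return the user ID for this number, creating one if none exists."""
        key = self._key(phone)
        if key not in self.accounts:
            # An unmatched number silently becomes a brand-new empty account.
            self.accounts[key] = f"user-{self._next_id}"
            self._next_id += 1
        return self.accounts[key]


if __name__ == "__main__":
    naive = AccountStore(normalize=False)
    print(naive.login("+44 7700 900123"), naive.login("07700 900123"))

    safe = AccountStore(normalize=True)
    print(safe.login("+44 7700 900123"), safe.login("07700 900123"))
```

In the naive store the two spellings of the same number are treated as two different users. With normalization applied before the lookup, both logins resolve to the same account.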

The Holiday Support Void

I immediately did what any responsible user would do: I put the kettle on, then I documented the error and contacted support. I emailed both billing@suno.com and support@suno.com, attaching my receipt and the screenshots of the “Barbershop” account.

The problem, of course, is the timing. It is Christmas Eve. The human staff at Suno have likely left the office for the holidays, leaving their automated systems to run (and, in my case, fail) without oversight. Based on community reports, the turnaround for support tickets can be anywhere from a few days to two weeks.

For a Premier subscriber, being locked out of 10,000 tokens for two weeks is unacceptable. It’s half of the monthly value I paid for.

Moving to Higher Ground: Discord

To bypass this mess, I have now set up a verified account on Discord. Unlike the shaky ground of SMS gateways like “Clerk” and “Ding Ding,” Discord uses a verified email “handshake” that is much more stable.

I have instructed Suno to move my subscription and library to this new Discord login. By doing this, I am removing the phone number variable from the equation entirely. I am taking control of my own “rescue” because the company’s automated systems are clearly not up to the task.

A Warning to the AI Community

This experience highlights a growing problem in the AI industry. These companies are growing so fast that their security infrastructure can’t keep up. They rely on third-party gateways to handle our most sensitive data—our identities and our money—and when those gateways fail, the user is the one who pays the price.

If you are a Suno user, I urge you to look into alternative login methods like Discord or Google/Microsoft SSO. Don’t rely on the SMS “Ding Ding” or “Clerk” systems. They are currently cross-wired, and you might find yourself, like me, staring at a “Barbershop” instead of your own hard work.

Final Thoughts

I am still waiting for Suno to fix this. My songs are in limbo, and my credits are missing. But I have documented every step, and I have the “paper trail” to prove it. Whether you’re developing rude AI that “accidentally” adds your professions to the world, or writing a song to use with Suno, the rules are the same: check your lines, verify your foundation, and always have backup emergency tea bags. So, zero song creating for me over Christmas, unless Suno cares at all. They are eating into my month’s subscription, and worst of all, I spent days writing a special Christmas song for all my family. Suno ruined Christmas for Grandma!
